Collective Intelligence

How ideas come together to help us see the whole together, where people are continually learning how to learn together, where collective aspiration is set free.

The central, or narrow, definition of collective intelligence is that it is an "emergent property" between people, or perhaps a way of processing ideas (data) that generates aggregate or emergent ideas. It "arises" from individual interactions, but is not predictable from individual thoughts alone.

From there, we start down a path of different interpretations and questions. Can collective intelligence happen with two people? How many people do you need? Is there a threshold of how many individual thoughts you need before collective intelligence can surpass the abilities of individuals?

How does it happen? Can we make it happen? Does it surpass individual abilities? How? Why?

Collective intelligence is "one of those things" - one of those loaded phrases, full of unexamined ideas and assumptions, that get thrown around because they sound good. Because they sound like the right thing to say. They feel important.

We need to be more inclusive. We need to foster diversity. Environmental and social sustainability are our key drivers. We believe in ethical leadership.

When you hear or read stories that use these phrases, they often have something in common - they rarely come with an explanation of how or why they work. It feels right to say diversity or ethics are important and are good for an organization. There are strong arguments for equality and diversity from a humanism or human rights perspective. Equality for equality's sake, equal opportunity, social justice, fairness - which may actually be more central or important than the concept of equality in the context of organizational growth and change. But that's a whole other article.

The question is what do things like inclusion, diversity, fairness - or collective intelligence - actually do for an organization?

You can draw a fairly straight line to employee engagement, happiness and retention - statistically and logically. For example: "Women are more likely to express interest in an organization and perceive it as fair when women are highly represented in top management positions". Having people in your workplace you share traits with - be it gender, culture or history, to name just a few - can make it easier to feel like you belong, to make a connection, to create a sense of psychological safety. It's not a guarantee or a necessity, but it's significant.

Psychological safety is another one that, like fairness, really seems to get to the central question. Google’s Project Aristotle found that psychological safety, where team members feel safe to take risks and be vulnerable in front of each other, was the most important factor in successful teams. Feeling like a valued member of a team contributes to this sense of psychological safety.

Google's Project Aristotle, initiated in 2012, was a comprehensive study aimed at understanding the dynamics of effective team building within the organization. This two-year-long project involved the examination of 180 teams at Google. The primary focus was to discern why some teams succeeded while others did not, and the findings were quite revealing.

One of the key discoveries from Project Aristotle was that the composition of a team or the individual capabilities of its members were less crucial than how the team members interacted, structured their work, and viewed their contributions. The research identified five key traits shared by successful teams. Psychological safety was the clear #1.

Seeing people you share traits with in positions of power, leadership and success has another impact. It can create a sense of opportunity, a motivation or reason to try. Imagine you were told you had to run AND finish a marathon to get paid - but you were not allowed to win or even place in the top ten. Would you run so hard that you puked to win anyway? Or would you run just enough to finish? This is where the idea of fairness matters. Fairness creates opportunity which can impact drive and competition. It's not a guarantee or a necessity, but it's significant.

So there seem to be some fairly straight lines from inclusion and diversity to employee retention and engagement. In addition to being good for the individuals being included, there are tangible HR and productivity benefits to an organization. Those may be enough on their own to warrant policy, cultural and leadership shifts. But retention and engagement do not necessarily make an organization "smarter".

We see a lot of circular reasoning like: "4 Reasons Why Ethical Leadership in Business is Essential", reason #4: because Ethical Leadership is Good for Business (source). Some people might believe in being ethical. Some people might say a lot of wins can come from playing dirty - especially in business and sports.

Research like this can show the links from diversity and inclusion to retention, engagement and happiness, but claims like "Diverse Teams Are Critical for Innovation" fall short of demonstrating how, or why, inclusive business cultures generate increased creativity or innovation. Attribution is a difficult thing. It is difficult to show that diversity improves innovation when other internal forces are pushing for innovation and external forces necessitate it. It is difficult to show that innovation would not have happened, or would not have happened as much, if the organization was "less diverse". There are so many factors at play in an organization that it's easy to find a correlation, but correlation does not imply causation.

We can say both are happening without any proof that one is causing the other. You can't say it isn't related either, though, when there's plenty of data and anecdotal evidence. It would just be nice to understand the dynamics to show how the connection works.

Other changes in a company might be helping or hindering the innovative thinking. What kinds of businesses are being compared? What was happening in the marketplace? Was the company growing? How did they measure creativity and innovation? Were these being measured before the inclusive business cultures and policies were put in place?

If inclusion can improve productivity, for example, how does that make an organization "smarter"? If you have a bad strategy or make bad decisions, how valuable is that level of engagement? What happens if you've got everyone rowing really hard but some of them are rowing in the wrong direction?

If we want to make a cohesive argument that diversity, inclusion, fairness, psychological safety (and more) lead to better ideas, there is a piece of this story missing. Yes - they are great for individuals. Yes - they are good for employee retention and engagement. But if we want to say they generate better ideas that lead to a higher likelihood of success, or bigger/better/faster success, we need to connect these things to decision making, which connects us to collective intelligence. The idea that there is or can be an emergent "intelligence" from groups might explain how these things do make organizations smarter.

Let's look at a few of the more common variations of collective intelligence:

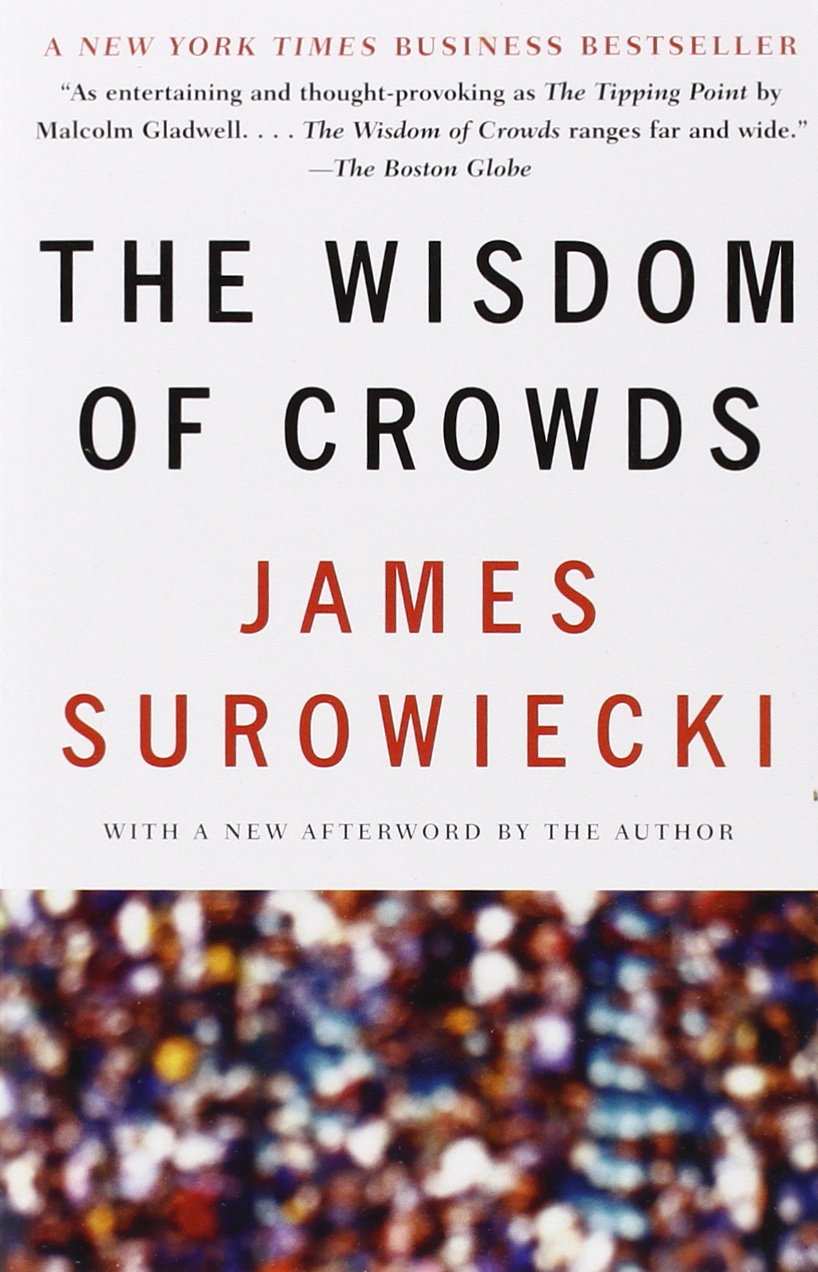

This conception is based on the idea that large groups of people can collectively make decisions or predictions more accurately than individuals or even experts. The concept, popularized by James Surowiecki in his book The Wisdom of Crowds, suggests that under the right conditions, the aggregation of information in groups results in decisions that are often better than could have been made by any single member of the group.

This is a fun read with some of the history and foundations that provide a good conception of collective intelligence.

Often seen in open-source communities and online platforms, this focuses on the enhanced capacity created when people work together, often facilitated by technology. The collective abilities and/or output exceed the sum of individual contributions.

Technologies like Git, and companies like GitHub, are good examples of this kind of collaborative intelligence. Sharing code through branches, pull requests, merges and issues/conversations is a literal idea-aggregation machine. It organizes people around an objective and around certain design patterns and frameworks, while enabling people to explore and eventually commit code that enhances or extends the whole.

This concept is derived from the study of social insects, like ants, bees, and termites, which collectively manage complex tasks through simple interactions. In swarm intelligence, the collective behavior of decentralized, self-organized systems, natural or artificial, leads to intelligent outcomes.

Simple creatures following simple rules (like birds flocking) can display a surprising amount of complexity, efficiency, and even creativity. A flock of 400 birds, for example, needs just about half a second to turn.

Imagine being able to pivot an organization like that! These natural phenomena are leading to evolutions in robotics, data mining, medicine, and blockchains.

The roots of collective intelligence can be traced back to when collective decision-making was a cornerstone in societies like ancient Greece with their democratic forums and councils. The concept began to take a more formal shape in the late 19th century.

In 1906, British scientist Francis Galton, known for his work in statistics and heredity, visited a country fair in Plymouth where he observed a weight-judging competition involving a fat ox. Participants, including both experts like butchers and farmers and regular fair-goers, estimated the ox's weight for a small fee, with the closest guesses winning prizes. Intrigued by this, Galton turned the event into an experiment to assess the average person's judgment. He collected the 787 submitted guesses, analyzed them statistically, and calculated the mean. Galton's initial assumption was that people's estimates would be way off. He was proven wrong. The crowd's average guess was 1,197 pounds, 1 pound off the actual weight of 1,198 pounds.
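The aggregation Galton performed is simple enough to sketch in a few lines of Python. This is a minimal illustration, not a reconstruction of his analysis: the function name `crowd_vs_individuals` and the four sample guesses are hypothetical, and only the ox's actual weight of 1,198 pounds comes from the story.

```python
from statistics import mean


def crowd_vs_individuals(guesses, truth):
    """Compare the crowd's average guess with each individual guess.

    Returns (crowd_estimate, crowd_error, share_closer), where share_closer
    is the fraction of individuals whose own guess beat the crowd average.
    """
    crowd_estimate = mean(guesses)
    crowd_error = abs(crowd_estimate - truth)
    closer = sum(1 for g in guesses if abs(g - truth) < crowd_error)
    return crowd_estimate, crowd_error, closer / len(guesses)


# Hypothetical guesses in pounds; only the true weight is from the story.
sample_guesses = [1000, 1100, 1300, 1400]
estimate, error, share_closer = crowd_vs_individuals(sample_guesses, truth=1198)
print(f"crowd estimate: {estimate}, error: {error}, share closer: {share_closer:.0%}")
```

In this made-up sample every individual is off by roughly 100 to 200 pounds, yet the errors point in different directions and largely cancel out in the average.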

The study of collective intelligence expanded, particularly within the realms of organizational theory and psychology. Researchers began examining how groups work together, make decisions, and solve problems. The focus was on understanding how the collective intelligence of a group could exceed the sum of its parts.

One of the interesting traits that began to emerge was the importance of diversity: groups needed a diverse mix of people to consistently produce "better" or more accurate results than individuals.
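One way to make the role of diversity concrete is an identity often called the diversity prediction theorem, associated with Scott Page. It is offered here as an illustration, not as something the early studies used. When the crowd's estimate is the plain average of the individual guesses, the crowd's squared error equals the average individual squared error minus the spread (variance) of the guesses around the crowd's estimate. The sketch below checks that identity on hypothetical numbers; the function name and the guesses are assumptions made for the example.

```python
from statistics import mean


def diversity_decomposition(guesses, truth):
    """Return (crowd squared error, average individual squared error, diversity).

    Diversity is the average squared distance of each guess from the crowd's
    average. For a plain average, the first value always equals the second
    minus the third.
    """
    crowd = mean(guesses)
    crowd_sq_error = (crowd - truth) ** 2
    avg_individual_sq_error = mean([(g - truth) ** 2 for g in guesses])
    diversity = mean([(g - crowd) ** 2 for g in guesses])
    return crowd_sq_error, avg_individual_sq_error, diversity


# Hypothetical guesses in pounds, with 1198 as the true value.
crowd_err, individual_err, diversity = diversity_decomposition(
    [1000, 1100, 1300, 1400], truth=1198
)
print(crowd_err, individual_err, diversity)
```

The identity shows why variety in the guesses matters: for a given level of individual accuracy, the more the guesses differ from one another, the smaller the crowd's error.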

In May 1968, the U.S. submarine Scorpion vanished en route to Newport. The only thing the Navy knew was its location when it last made radio contact. Beyond that, it had only the vaguest sense of how far the submarine might have traveled over a vast and deep area of the ocean.

Naval officer John Craven rejected the conventional approach of consulting a few top experts. Instead, he put a diverse team of people together - mathematicians, submarine specialists, salvage men - and asked for their best guesses on what happened to the sub and where it ended up.

Craven had people bet on their estimates, with bottles of Chivas Regal as prizes. He used their varied estimates and applied Bayes' theorem, a method to revise probabilities based on new evidence, to determine the Scorpion's final location. The submarine was not in a spot that any one individual had picked.

But the location he came up with, using everyone's ideas, led to the submarine being discovered 220 yards from his prediction. Extraordinary, especially considering the minimal and vague information the group had to work with.
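To show what "revising probabilities based on new evidence" looks like in practice, here is a minimal sketch of a Bayes' theorem update over candidate search zones. It is not a reconstruction of Craven's calculation: the zone names, the prior (imagined as pooled bets) and the likelihoods are all hypothetical numbers chosen for the example.

```python
def bayes_update(prior, likelihood):
    """Return P(zone | evidence) from P(zone) and P(evidence | zone)."""
    unnormalized = {zone: prior[zone] * likelihood[zone] for zone in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("the evidence is impossible under every hypothesis")
    return {zone: weight / total for zone, weight in unnormalized.items()}


# Hypothetical prior belief about where the wreck lies (sums to 1).
prior = {"zone_a": 0.5, "zone_b": 0.3, "zone_c": 0.2}

# Hypothetical chance of seeing a new piece of evidence if the wreck
# really were in each zone.
likelihood = {"zone_a": 0.1, "zone_b": 0.6, "zone_c": 0.3}

posterior = bayes_update(prior, likelihood)
best_zone = max(posterior, key=posterior.get)
print(best_zone, round(posterior[best_zone], 3))
```

Here the evidence shifts the most likely location from the zone most people initially favoured to a different one, which is the kind of revision the method makes possible.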

There have been many subsequent experiments showing how groups of people can consistently come up with better answers. One study showed that on the gameshow Who Wants To Be A Millionaire, experts used in a lifeline were right 65% of the time while studio audiences got the right answer 91% of the time.

Professor Hazel Knight showed that aggregate guesses of room temperatures could come within 0.4 degrees, much closer than most individual guesses. Kate H. Gordon asked two hundred students to rank items by weight, and found the group's estimate was 94% accurate. Only 2% of individual guesses were closer.

Finance professor Jack Treynor ran the famous jelly beans in the jar experiment in his class with a jar that held 850 beans. The group estimate was 871. Out of hundreds of students, only one person's guess was closer than the group's average.

We ran our own experiment in a local high school, linked to an environmental project. We put a nondescript tank filled with gas on display and asked students two questions: how much gas does this tank hold, and how much CO2 would it produce if it was burned? (You can calculate the latter if you know the mass of the former.)
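The second question can be worked out from the first with a little chemistry. The sketch below assumes "gas" means gasoline, that it burns completely, and that it has a density of about 0.75 kg per litre and is about 87% carbon by mass. Those figures, the function names and the 20-litre tank are approximations and assumptions for illustration, not values from the experiment.

```python
# Approximate molar masses in g/mol.
MOLAR_MASS_C = 12.011
MOLAR_MASS_CO2 = 44.01


def fuel_mass_from_volume(volume_litres, density_kg_per_litre=0.75):
    """Convert a fuel volume to mass (default density is an assumed value)."""
    return volume_litres * density_kg_per_litre


def co2_from_fuel_mass(fuel_mass_kg, carbon_fraction=0.87):
    """Estimate kg of CO2 from burning fuel, assuming complete combustion.

    Each carbon atom in the fuel ends up in one CO2 molecule, so the mass
    of CO2 is the carbon mass scaled by the ratio of molar masses.
    """
    carbon_mass_kg = fuel_mass_kg * carbon_fraction
    return carbon_mass_kg * MOLAR_MASS_CO2 / MOLAR_MASS_C


tank_litres = 20  # hypothetical tank size
co2_kg = co2_from_fuel_mass(fuel_mass_from_volume(tank_litres))
print(f"{tank_litres} L of fuel -> about {co2_kg:.0f} kg of CO2")
```

The CO2 weighs roughly three times as much as the fuel itself, because each carbon atom picks up two oxygen atoms from the air when it burns.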

The question is, who cares? How does guessing jellybeans in a jar help us with complex problems?

There are a number of more useful and practical ways that collective intelligence is used in different industries around the world:

Platforms like InnoCentive allow organizations to post complex problems for a global community to solve, such as finding a method to separate oil from water in frozen conditions.

Google collects and analyzes vast amounts of data from user searches, clicks, and browsing behavior. This data reflects the collective preferences and judgments of millions of users, contributing to the refinement of search algorithms.

LEGO uses its Ideas platform to crowdsource toy set ideas, with popular concepts being turned into products.

Ushahidi was used in the Haiti earthquake for real-time information collection, aiding in rescue and aid distribution.

eBird by the Cornell Lab of Ornithology uses birdwatchers' data for bird distribution and conservation efforts.

PatientsLikeMe allows patients to share experiences with treatments and symptoms, aiding disease understanding.

Platforms like eToro enable investors to share and discuss investment strategies, leading to informed decisions.

Open-source projects like Linux involve global developer contributions, driving innovation in software.

Wikiplanning enables community input on urban development projects, reflecting diverse needs and preferences.

Collective intelligence takes on a special nature in an organization. When people see their ideas helping shape a vision or a strategy, they tend to feel more invested in the outcomes. It creates a sense of belonging, of being important, of having a vested interest in the team's success.

That inclusion, in both the process and the solution, increases how much people care and how much they understand. Numerous theories and experiments demonstrate this. Theories like the hierarchy of needs, self-determination and social identity all show that human motivation and commitment are not just about monetary rewards or individual achievement. Motivation and commitment are significantly about social dynamics and personal fulfillment of these social needs.

People need and want to belong.

Strategies for solving complex problems are not jellybeans in a jar. A good strategy is essentially a decision - an agreement by an organization's leadership - on what a team is going to do in order to solve a particular problem or set of problems.

So if an inclusive process helps people solve problems because of their connection to the team and the solution - especially knowing success is a process, learning from failures, making adjustments along the way - then some part of the benefit is not even tied to being right.

It's tied to being "in it together". That's the interesting thing about an inclusive or collective process. When done right, it organizes and connects people in a way that is as big, if not bigger, a determinant of success than being "right".

We see the same dynamic in team sports. Teams that win work well together, teammates have chemistry, play hard, everyone contributes. Even in basketball, where star power has a huge impact, chemistry is essential to your team having success, and success improves chemistry as teams learn how to win.

Of course you want both - a few superstars surrounded by a cohesive team working well together. You want depth and contribution through your whole lineup.

Not having that chemistry, not having everyone contributing, introduces multiplying risks. First, let's clarify that diversity and inclusion do not mean democracy, consensus or mob rule. Teams still have leaders - owners, coaches and captains, executives and managers. Businesses have high performers. Even in businesses with a flat hierarchy and no positions of power, there are still thought leaders and project or product champions at one time or another.

It is ok to lead. It is ok to have the executive oversight that provides the process, the context, the guidelines, and makes the "executive" decisions. It is ok to be a champion with more experience or creative vision that drives things forward. What's important is that you listen. That there is a sense of fairness, psychological safety, clear pathways for people to participate.

And it's important to know that people - especially "experts" - can be the worst at knowing when they are wrong (especially when they are not listening to others). It's not specific to an expert or superstar. The downside of not listening just has more weight or impact when we're talking about experts or leaders with the power to make decisions.

There are a number of reasons why listening helps, or to put it another way, the risks we can mitigate by listening to others:

This takes us back to our question. If we have an organization full of diversity, inclusive processes and leaders that listen - how does that make the ideas, decisions and strategies better? What are the mechanics, the dynamics, behind why many ideas can lead to more successful outcomes?

If we make a comparison between AI and CI, thinking of both of those as a technology that needs many ideas to work, we can start piecing together an answer to how or why many ideas can make things better.

AI is a black box that spits out answers. Think about ChatGPT. We type a simple prompt and get incredible and immediate results (usually). It's a "black box" because the process is not transparent or easily understandable, even though the input and output are visible. You cannot determine how the machine came up with an answer.

The algorithms consist of many layers and thousands or millions of parameters. You can't trace exactly how or why the model arrived at a specific decision or prediction. Unlike rule-based systems where decision logic is explicitly coded, machine learning models learn from data and develop their own rules that are not always clear to human observers. They are trained on vast amounts of data and process at inconceivable speeds.

ChatGPT's illustration of its own brain.

The human brain is also a sophisticated data-processing system. Throughout life, we continuously gather and store a vast array of information, experiences and emotional responses. When it comes to decision-making, each of our brains analyzes its own reservoir of accumulated data. Like AI, the specific pathways and influences leading to an idea are not always transparent. Unlike AI, we can probe our thoughts and memories and often "retrace our steps".

Here is where things get different - general intelligence. In the context of human cognition, this is the ability to apply knowledge and skills in a wide variety of situations. It encompasses a range of cognitive abilities, including reasoning, problem-solving, planning, abstract thinking, learning from experience, and adapting to new situations.

The AI coming into popular use today does not have general intelligence. Much of it is specialized and "domain-specific" - focused on a particular area like health care, for example. Large Language Models (LLMs) are broader, but still bounded. ChatGPT is a general-purpose language model based on the GPT (Generative Pre-trained Transformer) architecture. This means it is designed to perform a wide range of language tasks and is capable of generating text across various topics and styles.

LLMs are highly proficient, demonstrating abilities such as answering questions, writing in various styles, translating languages, and more. However, their capabilities are largely confined to the realm of language processing and are based on patterns learned from vast datasets of text.

LLMs generate responses based on patterns in the data they were trained on. They lack the ability to generate genuinely novel ideas or concepts that are not in some way derived from their training data. Human creativity often involves intuition, insight, and outright mistakes. We make "leaps of logic" and discover things based on complete accidents or strong, intuitive feelings. LLMs do not have any of this.

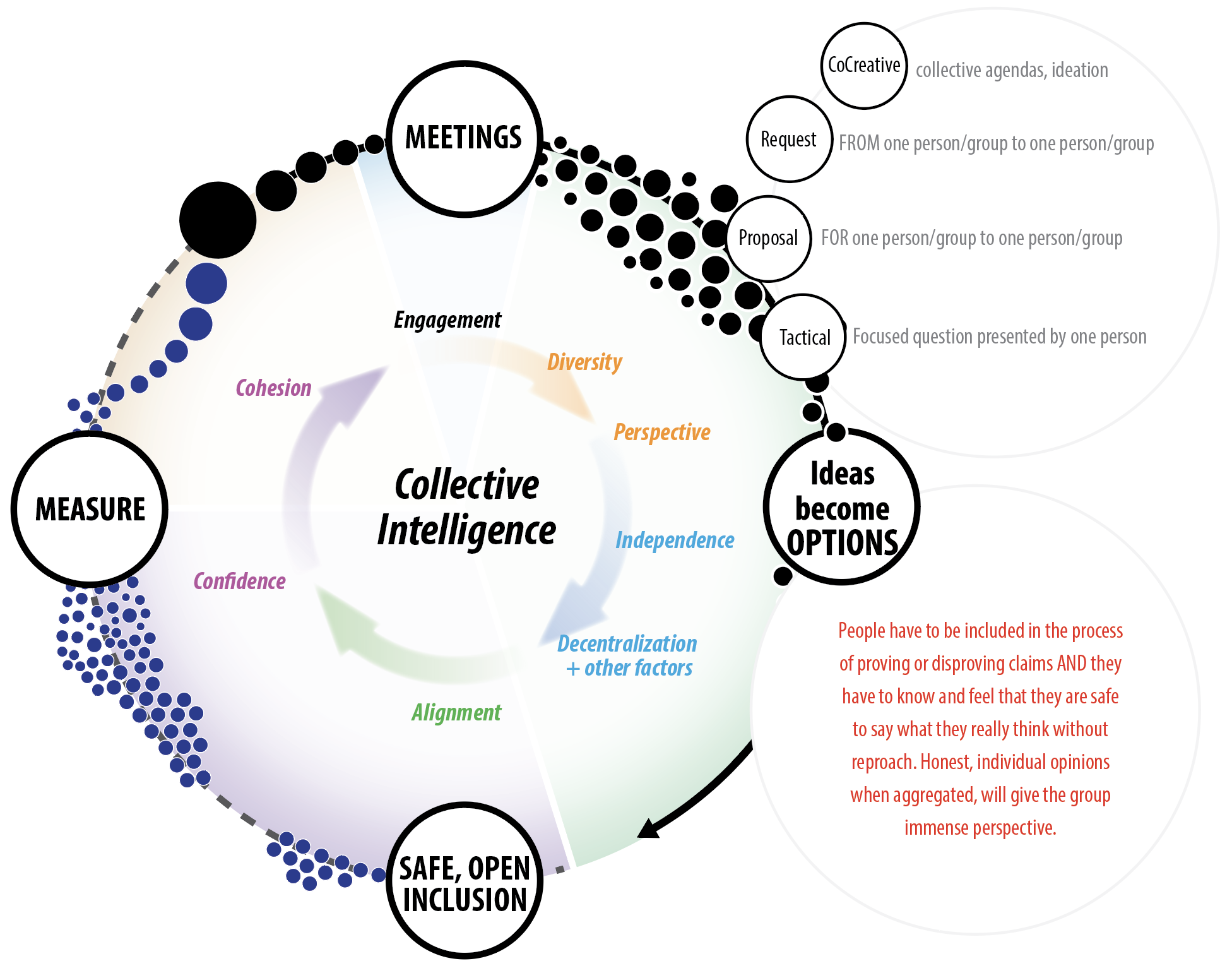

Collective intelligence is the layer or function that connects ideas from multiple people, multiple brains, to get answers to questions - just like prompts. And just like ChatGPT - the answers we get are only as good as the questions we ask, the context we provide, and the dataset we are drawing from.

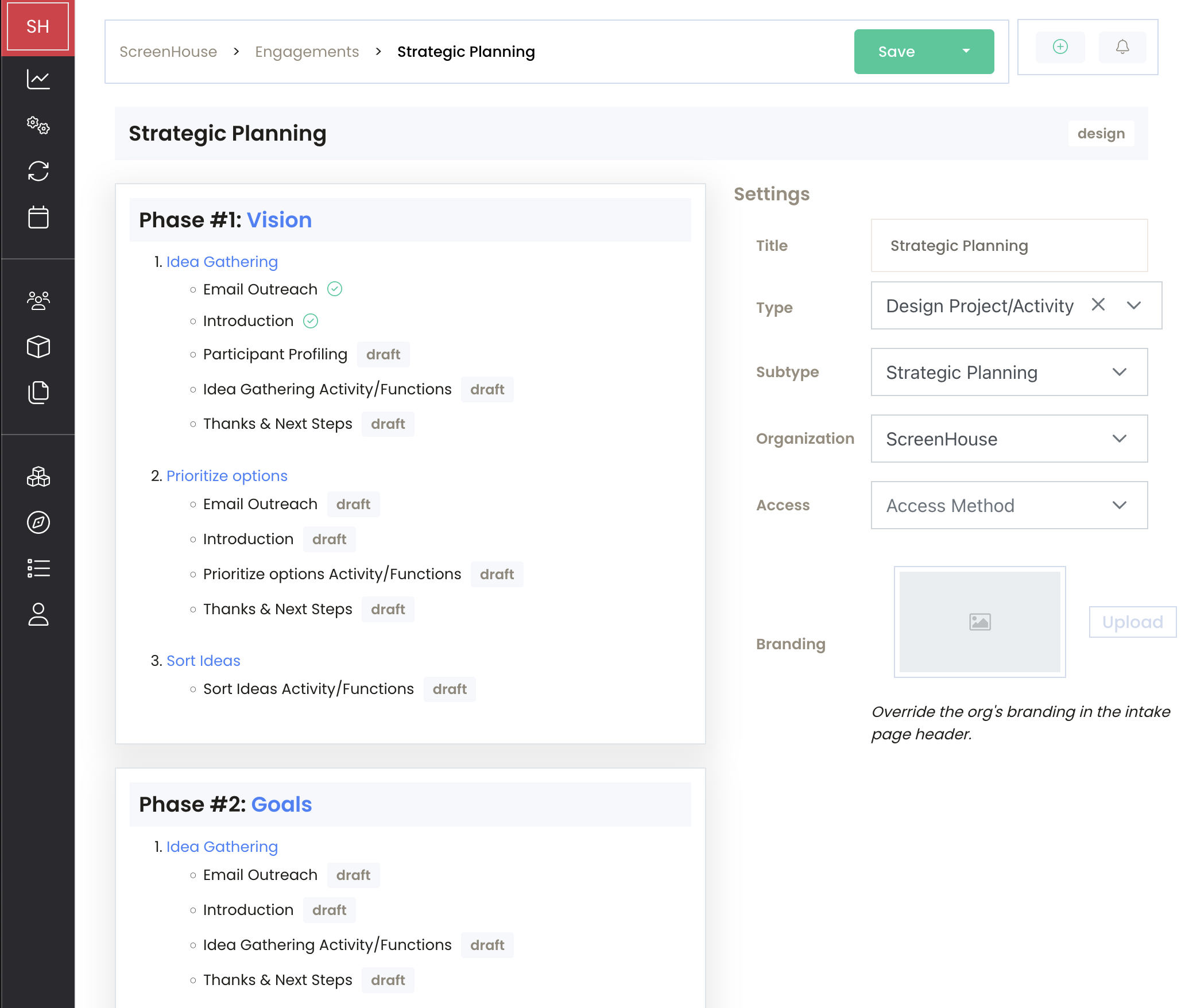

Building a repeatable, efficient and effective process for shaping individual ideas into tangible results.

The process starts by asking a question. With AI, the process is easy. You go to the website, type your question, and you get an answer. It's almost as easy with a person. You can turn to them (or pick up a phone, send a text message, write an email) and ask the same question.

With ChatGPT, you probably have to spend time giving it some context, and you're not sure how creative or accurate the answer is going to be. And its knowledge will stop at a certain date, so it might not be aware of the last few years. But the response will be articulate and tailored to whatever tone or persona you want.

With a person, assuming you didn't ask a complete stranger or a five year old, they'll probably understand the context of the question. And they'll be aware of what happened yesterday. They might come up with something completely out of the box. It might trigger a flash that sparks a quick exchange of ideas. But you have no idea if that person is right either. And it might not be nearly as articulate, complete, or well researched as ChatGPT. And you'll get personality and tone, which can change from day to day.

This process of engagement breaks down (or gets more difficult to do well) when you start including more people. Get five people in a room and you might have a nice discussion but it'll take an hour. Get twenty people in a room, and it would take hours to let every organic conversation unfold. Which is why fifteen of them probably feel like they are wasting time listening to other people talk for an hour.

Get 200 people in a room, and you need three days, tens of thousands of dollars, thousands of human hours, and depending on what process you used, a lot of sticky notes that end up in spreadsheets that probably take weeks, or months, to turn into whatever. A strategic plan, a shared vision, etc.

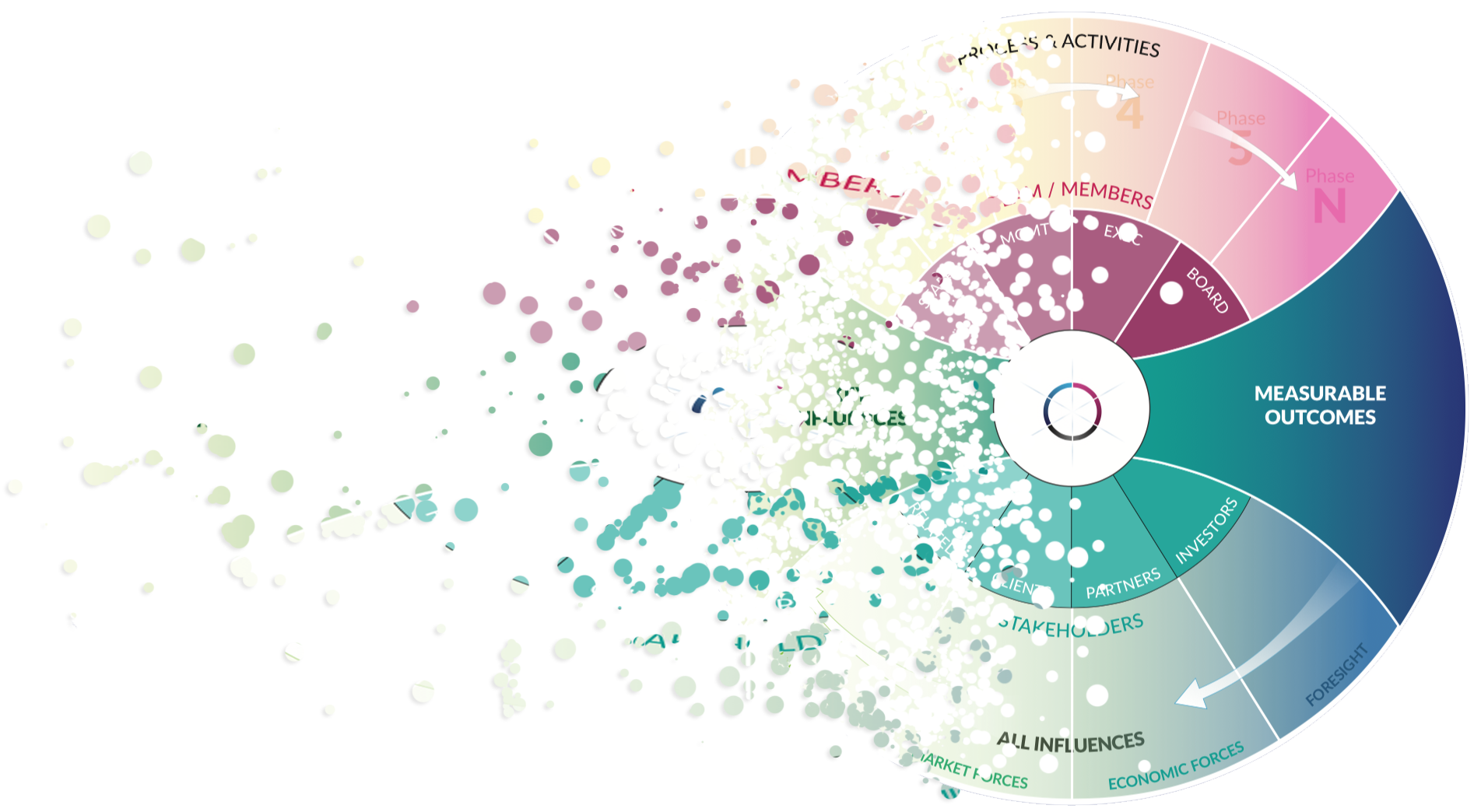

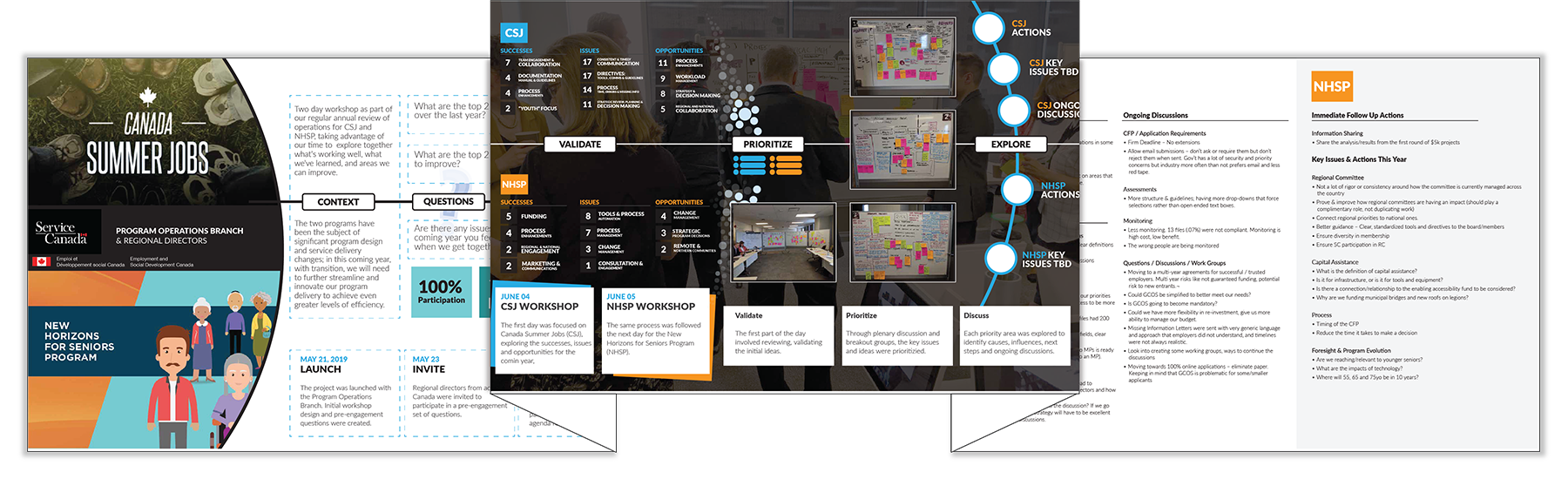

Tapestry of an engagement process. We've facilitated many; they typically take 2-3 months and cost 10k to 75k+ to deliver.

Imagine if ChatGPT's response to your prompt was: Sure! Just give me three months and fifty thousand dollars and I'll get that answer for you.

Making collective intelligence more like AI means we have to create a system - automated processes, algorithms - to tap into individual ideas in a fast, efficient and effective way, even in large organizations. After 30+ years of running 'design thinking' engagements with businesses, governments, First Nations, and community/not-for-profit organizations, we've developed a way of doing it that produces good results. It isn't the only way to run meetings and workshops of course, but it is repeatable - the same general design and delivery steps that produce consistent results.

First, we define "effective engagement" on the following metrics:

While it would be nice to track implementation results and success rates over time, this would be extremely difficult and cost prohibitive. Multi-year endeavours with hundreds of activities and changes driven by external forces are difficult to track and attribute to any one set of ideas or processes. The reality is people and organizations are actually quite bad at achieving their intended results. We're operating in a system where the vast majority of businesses are "mediocre" (more on that at the end of this article) so mediocre performance is normal. We set off on a course of action, solve problems and make changes along the way. We throw in a lot of extra time and people-hours, increase costs and take on debt, and maybe complete half of our projects, which achieve some of the intended results. Then we continue on to the next product, program or strategic planning cycle.

Also, organizations don't tend to run duplicate processes to produce different plans, execute them in tandem, and compare results to see which one worked better. We can compare things like success rates of organizations with and without strategic plans, or success rates of inclusive versus non-inclusive organizations, to get a high-level view of what makes great organizations and great successes work. But it's difficult to follow a completely scientific process when you're talking about starting or building a business.

In the context of all of that, through our experience and research, we've identified the key components you need to run a repeatable process that is efficient and effective at shaping people's ideas into something tangible:

The last two, application and continuity, are two sides of the same coin. With AI, the black box is processing sets of data from static sources (web pages etc) at the request of whoever types the prompt. In CI, a person (usually a manager or some type of leader, a consultant or some type of process facilitator) is trying to get answers from individuals. In some cases it might be a team working asynchronously in a flat hierarchy, but there's still a facilitator in the process (although it could be automated by technology).

Either way, the "application" and "continuity" components reflect the feedback or "reciprocity" either between the leadership and the team or within the team equally. It doesn't mean that everyone sees everything at the same time - again, it doesn't have to be a democracy or a consensus. But it does need to have an element of feedback or reciprocity. Without that, an "engaging" process breaks down into a one-way survey that suffers from all of the drawbacks of experts who don't listen and processes that are not inclusive or fair.

The last thing you want to do is create a situation where you are asking people to take time away from actually getting work done and contribute ideas to help your company make more money, without any kind of feedback, benefits, rewards or connection to the results.

Remember - motivation and commitment are not just about monetary rewards or individual achievement. Motivation and commitment are significantly about social dynamics and personal fulfillment - which comes in large part from being a part of something you helped create.

This isn't an argument against giving people more stake in your financial success - especially when their ideas and performance are helping to create it. It's a reminder of how valuable and important it is for people to be included and listened to. And it is the foundation of the logical connection to collective intelligence and why things like inclusion matter. Collective intelligence relies heavily on being able to access the "tacit knowledge and experience" of people in your company or community. This is something that can only be given voluntarily. You can ask (or require) people to perform certain duties to a certain level. You cannot own people's knowledge while it is still in their minds, you cannot require people to give you "all of their ideas", nor could you ever possibly know if they had.

Having access to people's unhindered and creative thinking is crucial for collective intelligence. It is the foundation for why things like fairness and psychological safety matter, why they matter beyond the individual benefits, beyond the HR benefits. They are essential for creating a whole that is more than the sum of its parts.

The data model - putting the components together to create a system capable of generating collective intelligence.

Every process is the same, and every process is different. The important lesson is that successful meetings, workshops, any activity, starts with good design.

We write more about the specific details on good processes in our article on better meetings. The general process of engaging people is generally the same. But context matters - the people, the problem, the time and money constraints will all impose design requirements or restrictions you need to consider in order to run a successful process.

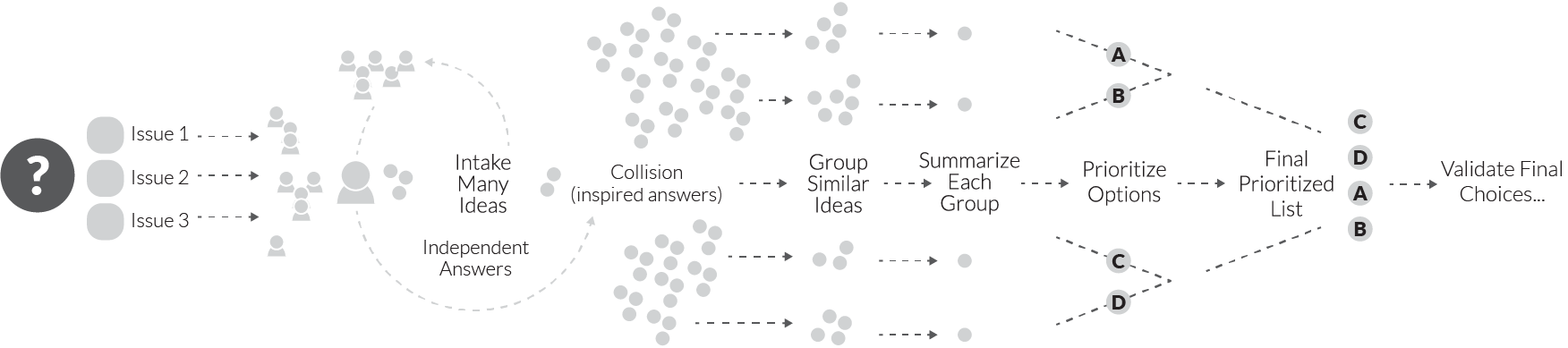

Shaping many ideas into a few good ideas is a multi-step process. There are a lot of features involved, so this is something we are going to unpack in a series of smaller articles.

It starts with collecting good ideas, which alone can require a paradigm shift in the way organizations are formed. Diversity, inclusion, psychological safety are a few of the key elements that need to be present to get the best possible results from the start.

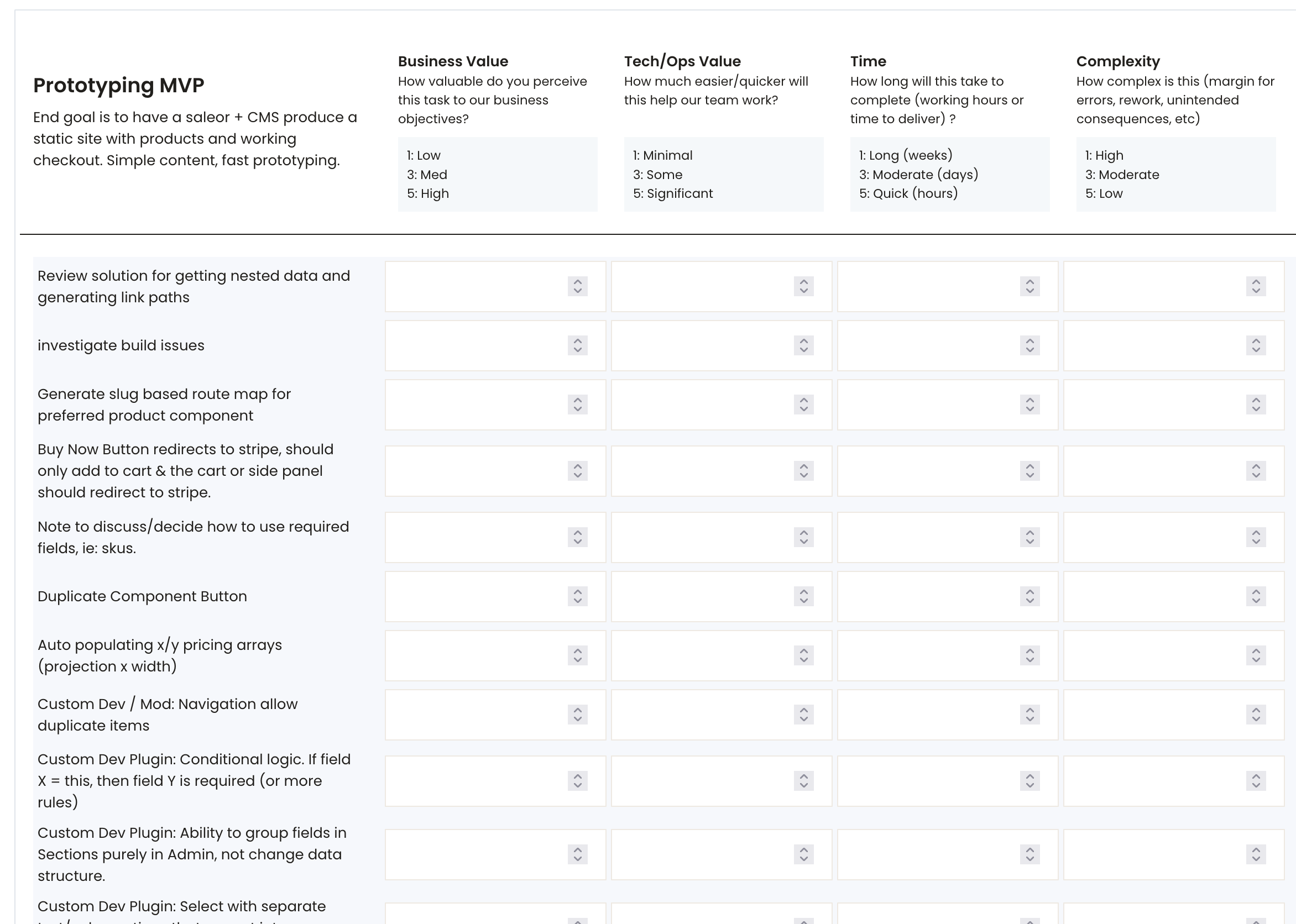

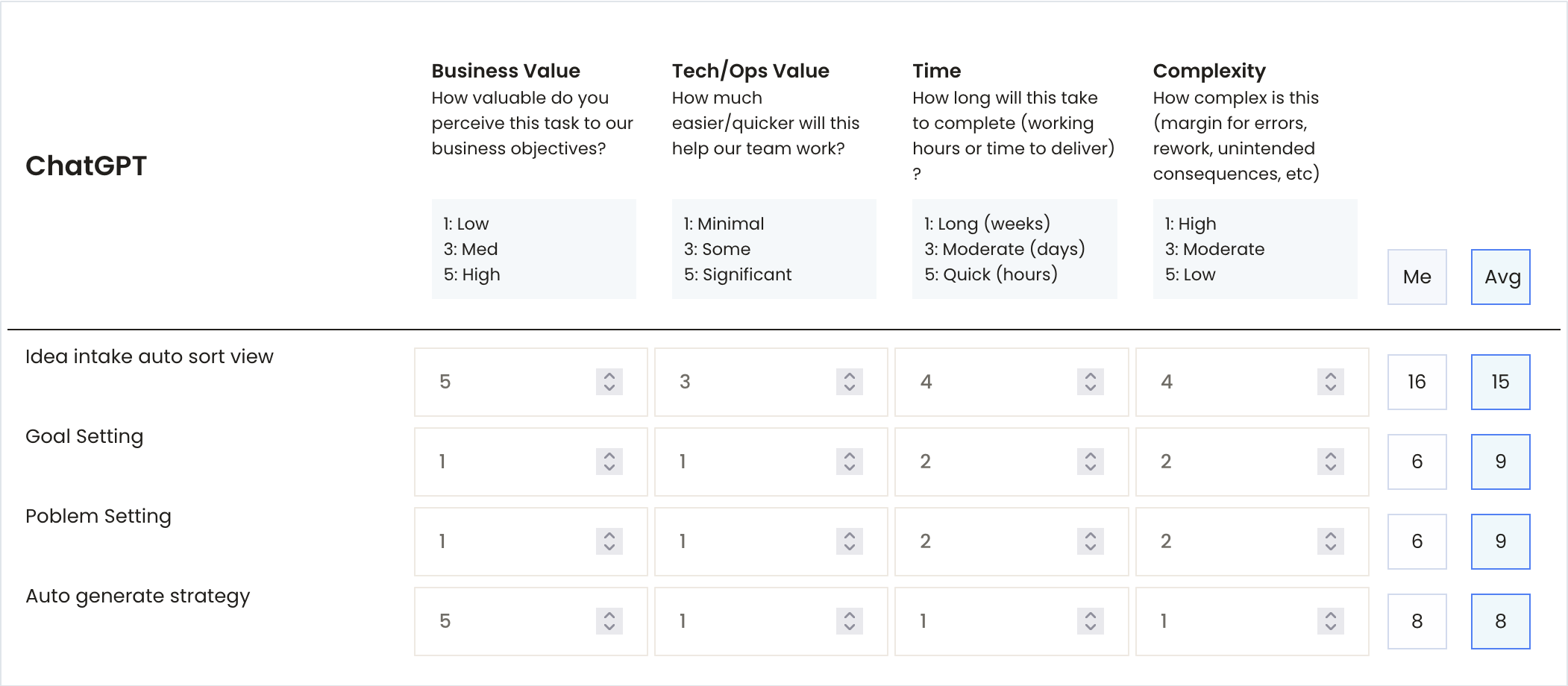

Part of the design process means asking powerful questions, good questions that elicit deep, critical thinking. All of this is just precursor to how you aggregate ideas. AI is incredibly helpful here, performing what's called "topic modelling" as an initial step that expedites the process of making meaning from many ideas.
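To give a feel for what that first pass does, here is a deliberately crude stand-in written in plain Python. It is not topic modelling proper (which typically relies on statistical or machine learning models); it simply groups ideas under the keyword they share most often with other ideas. The function names, the stopword list and the sample ideas are all hypothetical.

```python
import re
from collections import Counter, defaultdict

# A tiny, hypothetical stopword list; a real one would be much longer.
STOPWORDS = {"the", "and", "for", "more", "our", "with", "that", "should"}


def keywords(text):
    """Lowercase the text and keep words longer than two letters."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 2 and w not in STOPWORDS}


def group_by_shared_keyword(ideas):
    """Group each idea under its keyword that is most common across all ideas."""
    counts = Counter(w for idea in ideas for w in keywords(idea))
    groups = defaultdict(list)
    for idea in ideas:
        words = keywords(idea)
        if not words:
            groups["(other)"].append(idea)
            continue
        # Ties are broken alphabetically because max keeps the first maximum.
        label = max(sorted(words), key=lambda w: counts[w])
        groups[label].append(idea)
    return dict(groups)


ideas = [
    "Improve onboarding training",
    "More training for managers",
    "Cheaper parking downtown",
    "Parking passes for staff",
]
for label, members in group_by_shared_keyword(ideas).items():
    print(label, "->", members)
```

Even this toy version shows the point of the step: a facilitator starts from a handful of labelled clusters instead of a pile of individual sticky notes.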

Going from many ideas to a few good ideas - the best ideas that clearly articulate a problem, that become a coherent and cohesive set of priorities for addressing that problem - is an iterative process that can happen in many different ways.

Often, it is the iterative exchange of ideas that triggers an aha moment where an emergent idea takes shape. And outliers can be the adjacent ideas that create the leaps of logic AI isn't capable of.

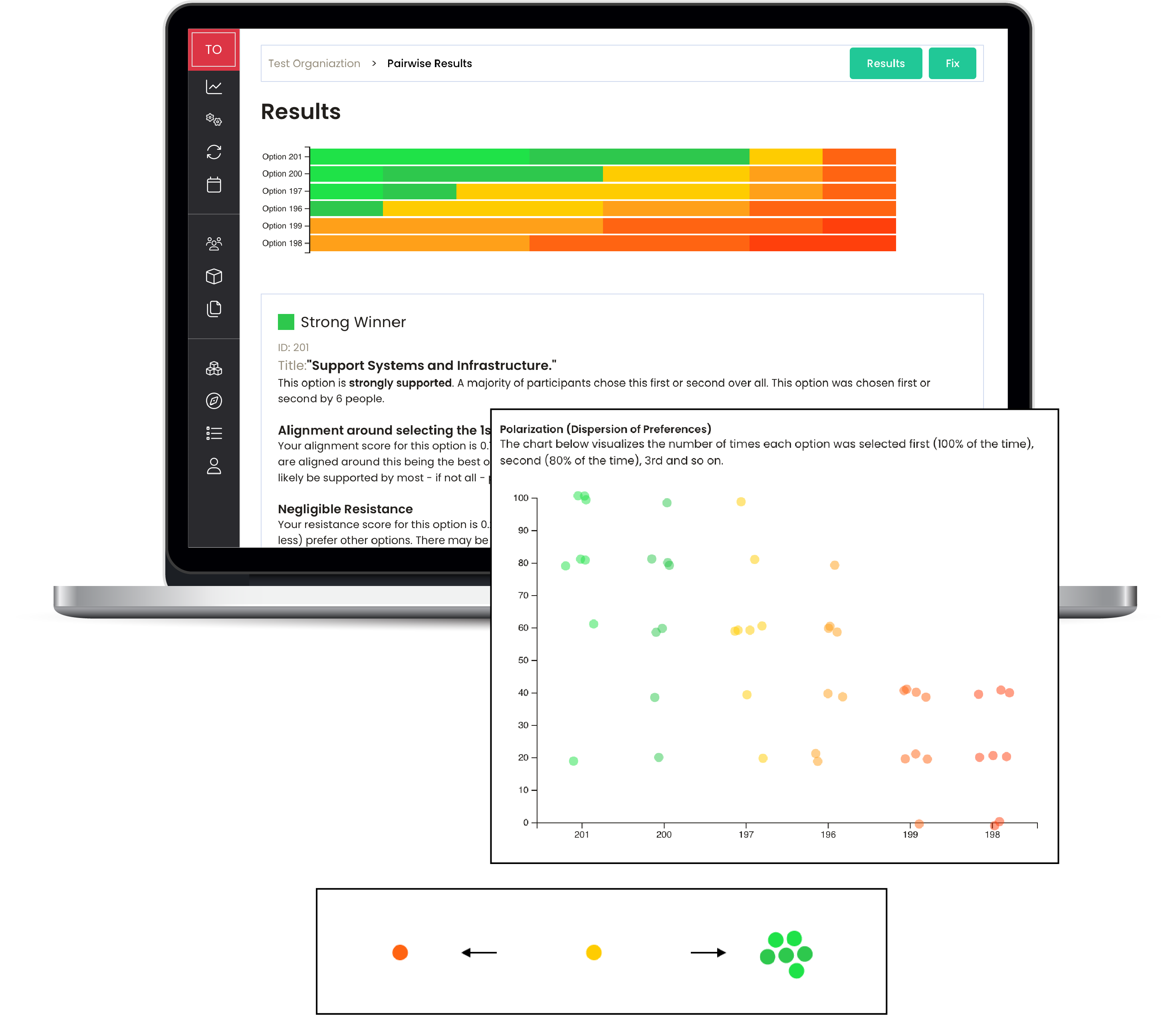

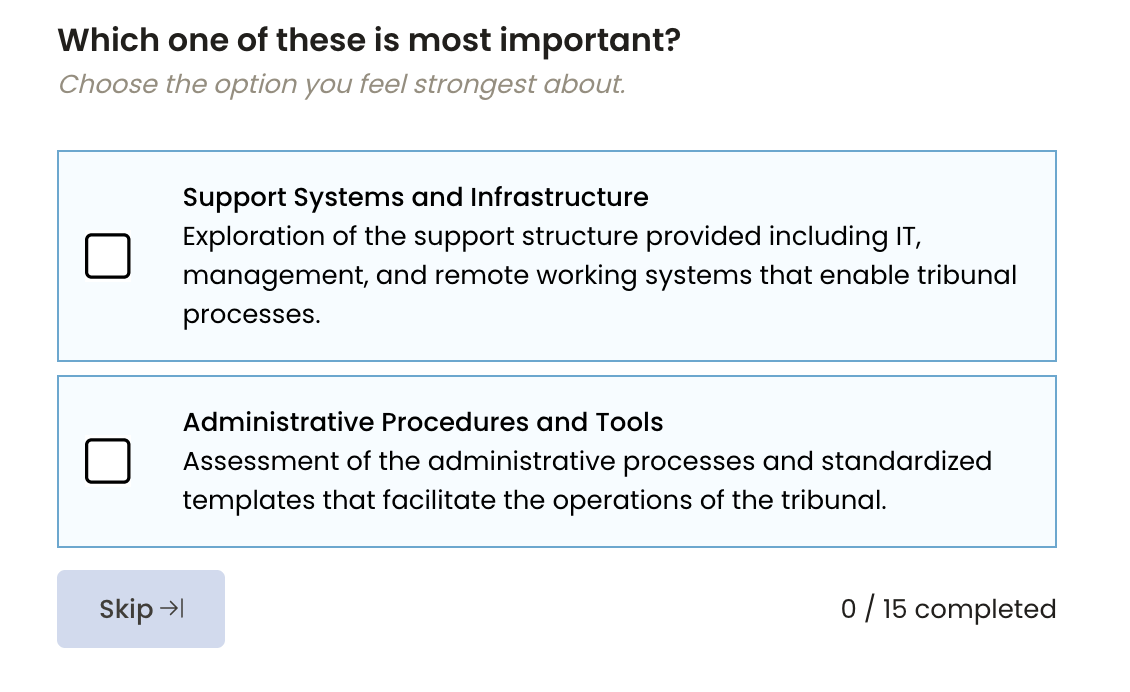

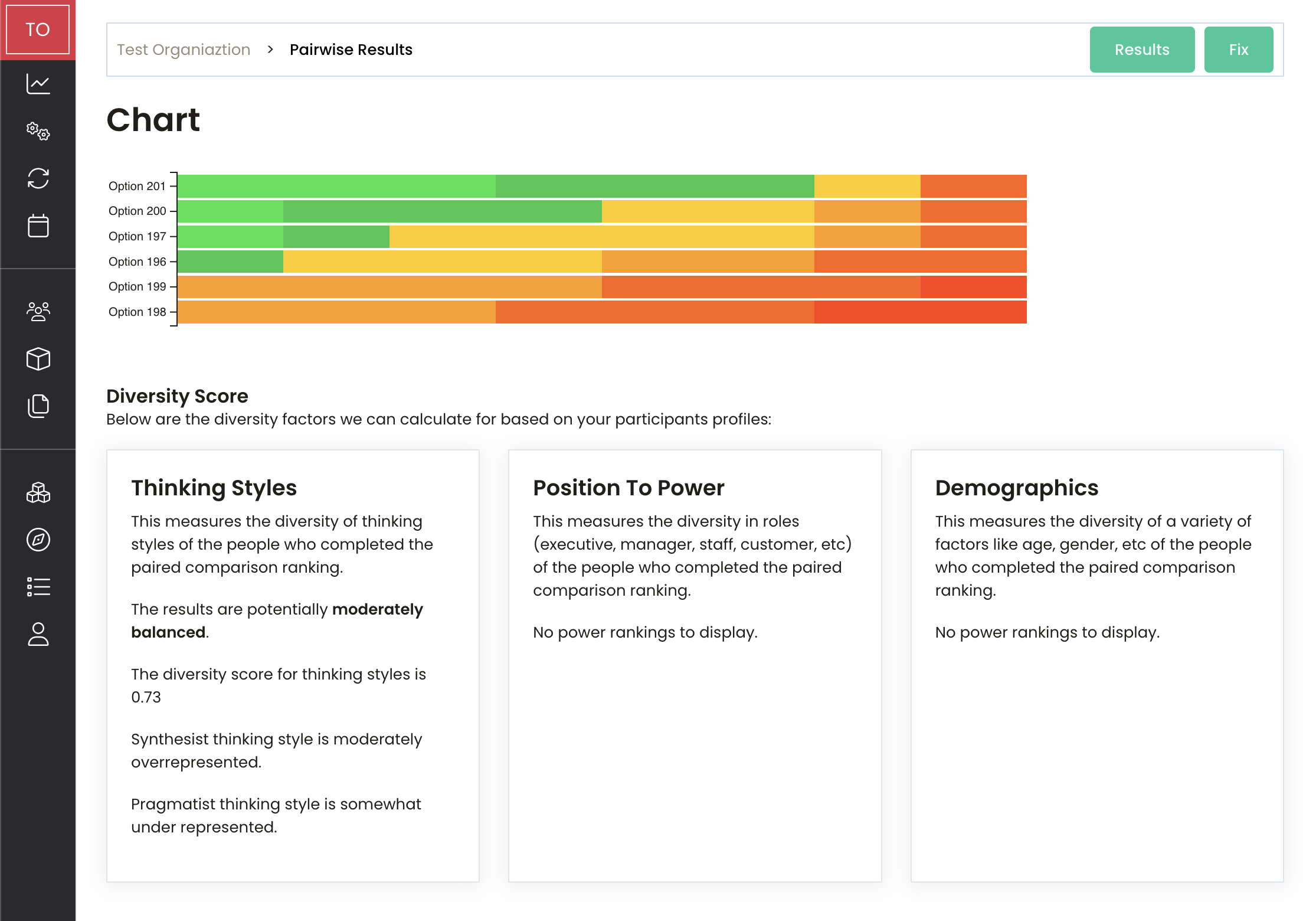

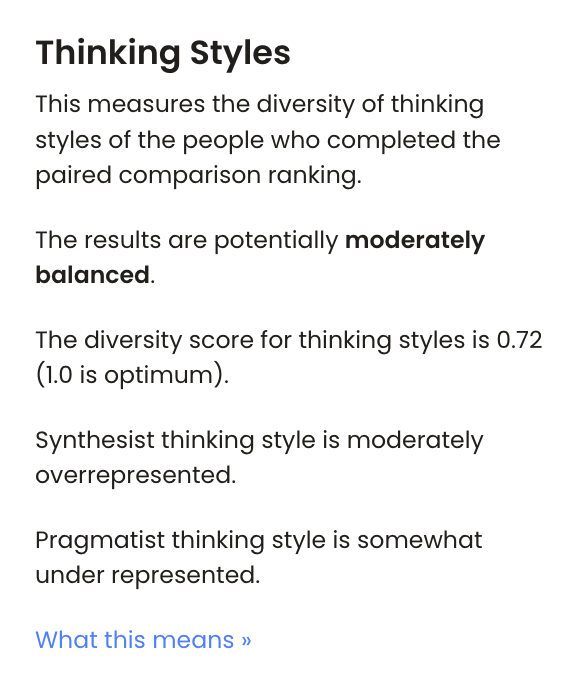

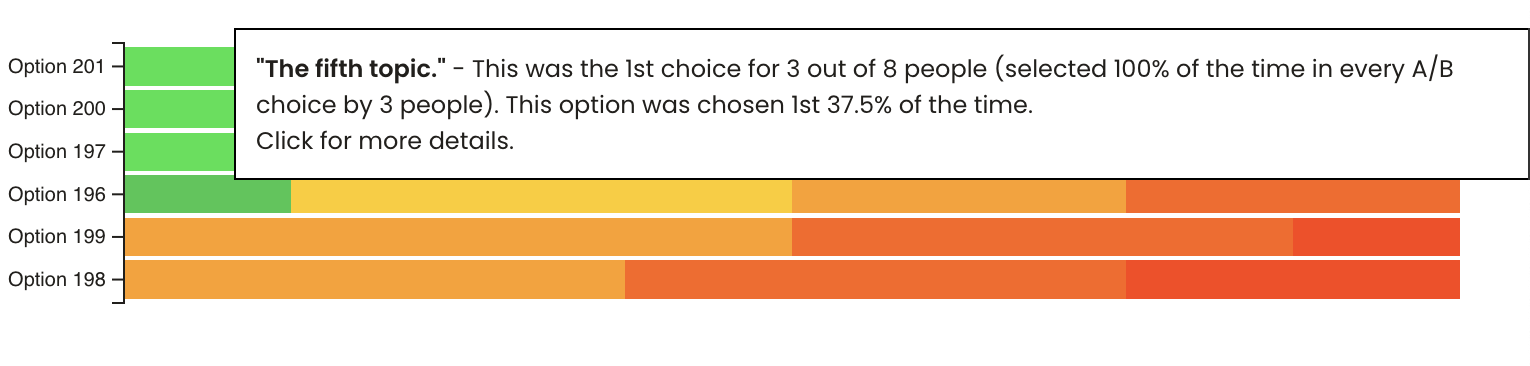

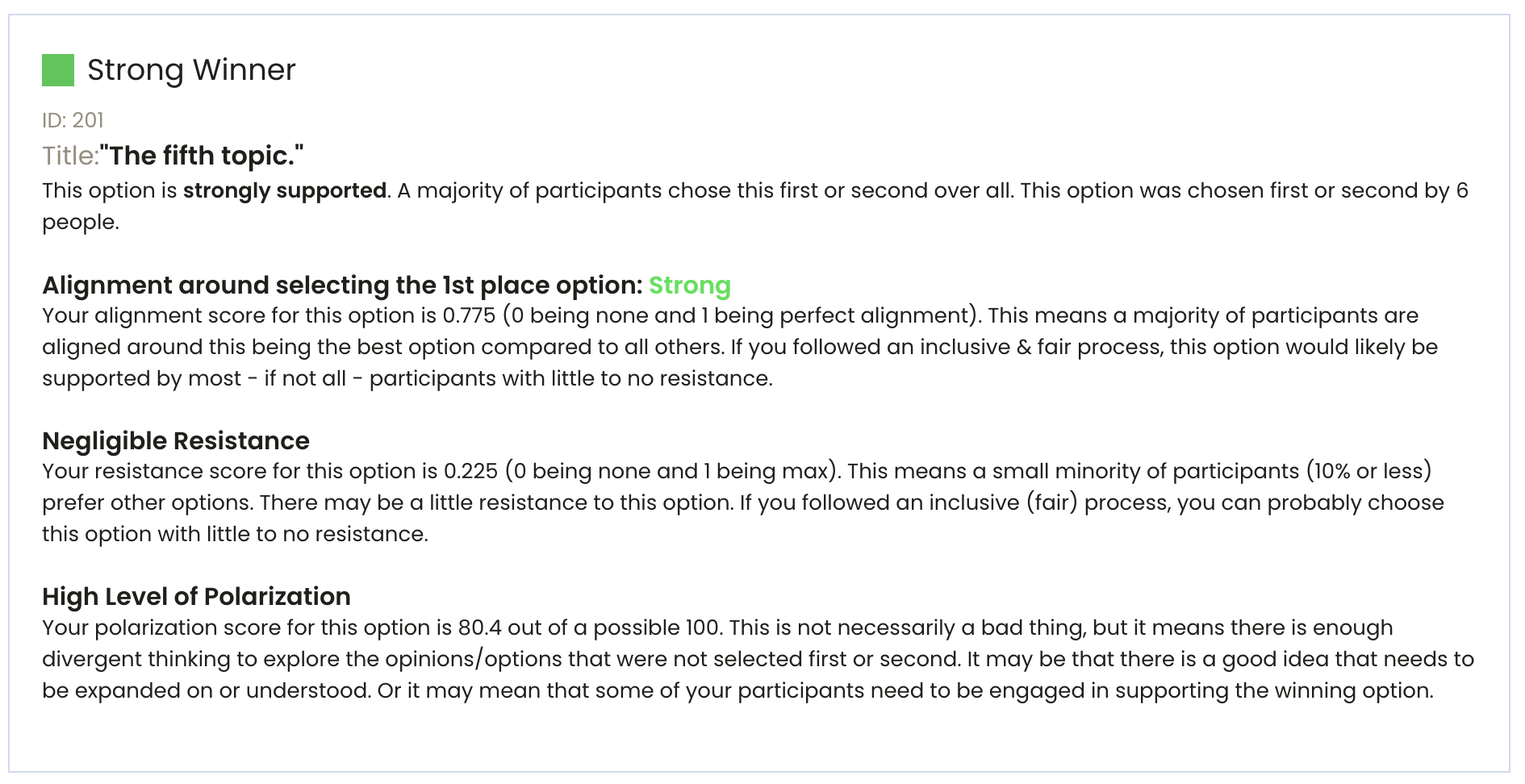

People are not great at comparing options when the options contain a lot of detail or complex factors. Processes like paired comparison can help tap into the unique aspects of human intuition, where we are able to make quick, more intuitive choices between complex options and produce results that reflect our true preferences.
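Paired comparison is easy to sketch: show every possible pair of options, record which one is chosen, and rank the options by how many pairings they win. The version below is a minimal illustration under that simple win-counting assumption; the function name, the sample priorities and the recorded choices are hypothetical.

```python
from itertools import combinations


def rank_by_paired_comparison(options, choose):
    """Rank options by the number of head-to-head choices they win.

    `choose(a, b)` must return whichever of the two options is preferred.
    """
    wins = {option: 0 for option in options}
    for a, b in combinations(options, 2):
        winner = choose(a, b)
        if winner not in (a, b):
            raise ValueError("choose() must return one of the two options")
        wins[winner] += 1
    # The sort is stable, so tied options keep their original order.
    return sorted(wins.items(), key=lambda item: item[1], reverse=True)


priorities = ["training", "parking", "new software"]

# Hypothetical answers one participant gave for each pair.
recorded_choices = {
    ("training", "parking"): "training",
    ("training", "new software"): "training",
    ("parking", "new software"): "new software",
}

ranking = rank_by_paired_comparison(
    priorities, lambda a, b: recorded_choices[(a, b)]
)
print(ranking)
```

Each decision is a quick either/or choice, yet the tally across all pairs yields a full ranking. With many participants, the same tally can be summed across people.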

When you put all of this together, from intake to aggregation and prioritization - through an iterative process founded on principles of inclusion and psychological safety to name a few, the result is a very powerful process and very valuable, or "intelligent" output.

We've spent the last decade (since 2013) learning from various business processes to develop the data model and parameters that make collective intelligence a repeatable and measurable property of an organization. Diversity, inclusion, psychological safety, independence, perspective, decentralization, coherence, cohesion, alignment, resistance - these are all "features" we use to process data, similar to the features you define to train an AI on a dataset.

We've touched on a few of these throughout this article - diversity, inclusion and psychological safety. We've outlined some of the more direct benefits to individuals and HR. We've pointed out that the majority of articles, stories and political mandates beg the question on their value to innovation (a logical fallacy in which an argument's premises assume the truth of the conclusion).

We'll be publishing articles focused on each of these, drawing the connections to how critical and valuable each of these are to creating "smarter" organizations that are more capable of unleashing human potential and achieving their intended results.

We started this article by stating a central, or narrow, definition of collective intelligence as an "emergent property" between people, a way of processing ideas (data) that generates aggregate or emergent ideas. It "arises" from individual interactions.

When you take the big picture into perspective - the importance of things like inclusivity and psychological safety - and add the idea of a continuous feedback cycle between individuals and "the whole", this turns out to be an accurate definition.

This is essentially a "learning organization", conceptualized by Peter Senge. This is an entity where people are continually learning to "see the whole together". Part of this is being able to articulate or visualize the system an organization is operating in - something we haven't explored in this article but we do expand on it in our systems article and our visual thinking articles. The other part of this, as we explored briefly, is collective aspiration and the special nature collective intelligence takes on when people are trying to achieve something together.

Continuous engagement - learning and designing - is an ongoing process embedded in the culture. It's not just about solving problems but also about developing systems thinking and seeing interrelationships and patterns of change rather than static snapshots. People are working together synergistically to enhance an organization's adaptability, responsiveness, and creativity.

As is the case with systems thinking, this article is just the tip of the iceberg of the collective intelligence story. We've got research, businesses in practice, and ongoing experiments that we'll be turning into articles and other resources over the coming months and years. So check back to see updates to this article and others as they go live. Thanks for reading!